Why Flow Architectures Will Change How We Approach Systems Availability

Event-driven approaches to integration (such as what I describe in Flow Architectures: The Future of Streaming and Event-Driven Integration) promise to change the way we solve a lot of problems in enterprise applications. Certainly the move to real time integration will change the speed at which we engage customers. However, an engineer named Scott Havens gave an interesting presentation at DevOps Enterprise a few years ago that opened my eyes to another interesting effect.

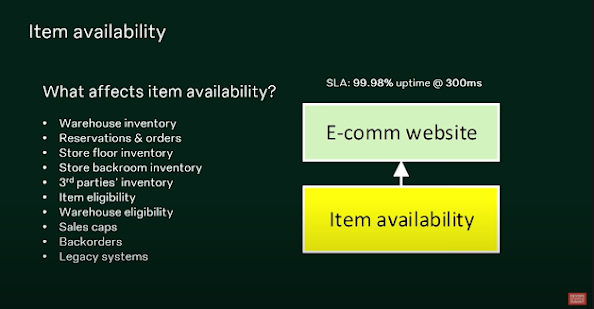

One of the biggest challenges in distributed systems is managing the effects of dependencies on the functionality of the system as a whole. Take, for example, a web application that must return a value calculated from data provided by several external sources. Havens used the example of a retail inventory availability service.

In a traditional service-oriented model, this task would be handled through a number of cascading service calls, starting with the service tasked with returning the final value, followed by calls to each of the external services that are providing relevant data. All of this would be done through request-response APIs (perhaps using REST), and possibly done in a synchronous fashion, meaning the calling service would have to wait for the called service to respond before continuing.

In a traditional (poorly designed) API service, this means that a failure in any of the service dependency calls would result in a failure for the service overall. Availability would then be a function of the availability of each service in the call chain, as noted in Havens's slide below.

That math is expensive. To maintain a reasonable 99.98% uptime (about 56 minutes downtime per year), Havens had to require the calling services to maintain 99.999% uptime (about 5 1/4 minutes downtime per year)—a considerable expense.

Note that call response times have a similar dependency. Each dependency service has to respond quite quickly in order for the availability service to meet its own objective.

Havens then turned his attention to what an architecture looks like in which dependencies stream their data to the availability service as events. He pointed out that a service that collects current state data and retrieves that current state locally is pretty much identical to a lookup table, so why not just use a lookup table? This would mean the only dependency that the availability service would have is a call to a very simple, very available local data set.

Thus, Havens pointed out, the streaming architecture availability service isn't dependent on the services supplying inventory data in order to meet a client request. As you can see, this changes the math completely.

In fact, I would point out that the availability service doesn't even need to know ahead of time of the existence of any given inventory data supplier. By providing an API to which suppliers can send data, this retailer can simply collect relevant data through a stream processor built for that purpose, and update the lookup table as needed.

The result is a much cheaper architecture for achieving the same availability.

Comments

Post a Comment